The conversation around responsible AI has gained significant momentum across industries, yet a universally accepted definition remains elusive. Responsible AI is often seen merely as a way to avoid risks, but its scope is much broader: it involves not only mitigating risks and managing complexity, but also using AI to transform lives and experiences.

According to Accenture, only 35% of consumers worldwide trust how organizations are implementing AI, while 77% believe that companies should be held accountable for its misuse.

In this context, AI developers are urged to adopt a robust and consistent ethical AI framework. Responsible AI is no longer just a buzzword; it is a framework that ethically guides the development and use of AI across industries.

This blog explores what responsible AI is, offering insights into its principles and practical implementation for ethically oriented businesses.

What Is Responsible AI (RA) and How Does It Work?

Responsible AI (RA) is the practice of developing and deploying AI systems that are ethical, transparent, and accountable. It aligns AI with societal values, respects human rights, and promotes fairness while mitigating risks and unintended consequences.

In addition, responsible AI helps produce trustworthy AI systems that benefit all stakeholders, from developers to users.

Now that we have a basic understanding of what responsible AI is, it’s time to look at how it works.

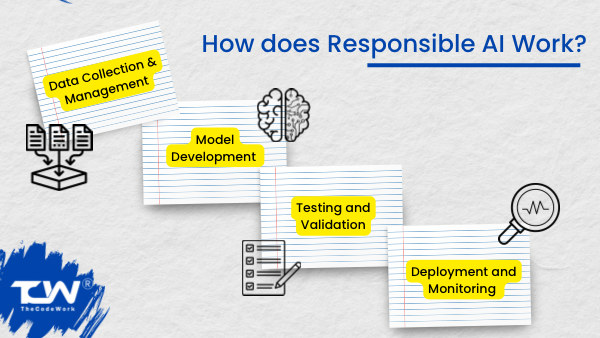

Here’s a detailed breakdown, segmented into key phases:

Data Collection and Management

Responsible AI starts with ethical data collection: respecting privacy and consent, and ensuring the data represents the populations the system will affect. Data collection should also be transparent, with clear communication to users about how their data will be used.

To prevent AI systems from perpetuating biases, responsible AI requires scrutinizing data sources to identify and address potential biases. Techniques like diverse sampling and bias audits help ensure that the data is fair and representative.
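A simple starting point for a data bias audit is to compare how often each group appears in the dataset with its share of the population the system will serve. The sketch below assumes you already know (or have estimated) those reference shares; the function names, the `gender` attribute, the 5% tolerance, and the data are all hypothetical and for illustration only.

```python
from collections import Counter

def representation_gaps(records, attribute, reference_shares):
    """Compare each group's share of the dataset with a reference share.

    Returns {group: dataset_share - reference_share}. Negative values mean
    the group is under-represented relative to the reference population.
    """
    counts = Counter(r[attribute] for r in records)
    total = sum(counts.values())
    return {
        group: counts.get(group, 0) / total - ref_share
        for group, ref_share in reference_shares.items()
    }

def flag_underrepresented(gaps, tolerance=0.05):
    """List groups whose share falls short of the reference by more than tolerance."""
    return sorted(g for g, gap in gaps.items() if gap < -tolerance)

# Hypothetical dataset of 8 records and an assumed 50/50 reference split.
records = [{"gender": "f"}] * 2 + [{"gender": "m"}] * 6
gaps = representation_gaps(records, "gender", {"f": 0.5, "m": 0.5})
print(gaps)                         # {'f': -0.25, 'm': 0.25}
print(flag_underrepresented(gaps))  # ['f']
```

A flagged group is a signal to collect more data or rebalance, not proof of a biased model on its own.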

Model Development and Training

During model development, responsible AI practices focus on designing algorithms that promote fairness and inclusivity. This includes selecting features and designing models in ways that minimize bias and ensure equitable treatment of all individuals. It also includes explainability: techniques from explainable AI (XAI) are used to create models that provide understandable reasons for their predictions.
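Popular XAI tools such as SHAP and LIME estimate feature contributions for complex models. As a minimal illustration of the idea, the sketch below swaps in an inherently interpretable linear model, where each feature's contribution (weight × value) is exact and additive. The weights, feature names, and applicant data are hypothetical.

```python
def explain_linear(weights, bias, features):
    """Score with a linear model and return per-feature contributions.

    For a linear model, score = bias + sum(w_i * x_i), so each term
    w_i * x_i is an exact, additive explanation of the prediction.
    """
    contributions = {name: weights[name] * value for name, value in features.items()}
    score = bias + sum(contributions.values())
    # Rank features by the size of their effect, largest first.
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return score, ranked

# Hypothetical credit-style model and applicant; values are illustrative only.
weights = {"income": 0.5, "debt_ratio": -2.0, "years_employed": 0.25}
applicant = {"income": 4.0, "debt_ratio": 0.5, "years_employed": 2.0}
score, reasons = explain_linear(weights, bias=-1.0, features=applicant)
print(score)    # 0.5
print(reasons)  # [('income', 2.0), ('debt_ratio', -1.0), ('years_employed', 0.5)]
```

The ranked list can be turned into plain-language reasons for the user, for example "your debt ratio lowered your score the most among negative factors".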

Testing and Validation

Testing includes specific procedures for detecting and mitigating biases in AI models. For example, fairness metrics and impact assessments are used to identify unintended biases so that the necessary adjustments can be made. Testing also involves analyzing how the AI system performs in various real-world scenarios, including edge cases and potential misuse.
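Two widely used fairness metrics compare how often a model gives a favorable outcome (a "selection") to an unprivileged group versus a privileged one: statistical parity difference (rate gap) and disparate impact (rate ratio). The sketch below is a minimal pure-Python version; the group labels and predictions are hypothetical, and the 0.8 cut-off is the "four-fifths" rule of thumb from US employment contexts, not a universal standard.

```python
def selection_rate(preds, groups, group):
    """Share of positive (favorable) predictions within one group."""
    selected = [p for p, g in zip(preds, groups) if g == group]
    return sum(selected) / len(selected)

def fairness_report(preds, groups, privileged, unprivileged):
    p_rate = selection_rate(preds, groups, privileged)
    u_rate = selection_rate(preds, groups, unprivileged)
    if p_rate == 0:
        raise ValueError("privileged group has no positive predictions")
    return {
        "statistical_parity_difference": u_rate - p_rate,  # 0 means parity
        "disparate_impact": u_rate / p_rate,               # 1 means parity
    }

# Hypothetical binary predictions (1 = approved) for two groups.
preds  = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
report = fairness_report(preds, groups, privileged="a", unprivileged="b")
print(report)  # {'statistical_parity_difference': -0.5, 'disparate_impact': 0.3333333333333333}
if report["disparate_impact"] < 0.8:
    print("Potential adverse impact: review before release")
```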

Deployment and Monitoring

When deploying AI systems, responsible AI emphasizes transparency about how the system will be used and its potential impacts. This includes giving users clear information about how the AI system operates and the decisions it makes.

Once deployed, AI systems are continuously monitored to ensure they operate within ethical boundaries and perform reliably. This requires real-time monitoring that tracks the AI’s performance and detects issues as they arise.
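One common monitoring signal is data drift: live inputs or scores gradually stop looking like the data the model was validated on. The sketch below computes the Population Stability Index (PSI) between a baseline and a current binned distribution. The bin proportions are hypothetical, and the 0.2 alert threshold is a frequently cited rule of thumb that you would tune for your own system.

```python
import math

def population_stability_index(expected, actual, eps=1e-6):
    """PSI between two binned distributions (lists of bin proportions).

    PSI = sum((a - e) * ln(a / e)); eps avoids log(0) for empty bins.
    """
    psi = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)
        psi += (a - e) * math.log(a / e)
    return psi

def check_drift(expected, actual, threshold=0.2):
    """Return the PSI and whether it exceeds the alert threshold."""
    psi = population_stability_index(expected, actual)
    return psi, psi > threshold

baseline = [0.25, 0.25, 0.25, 0.25]   # score distribution at launch (hypothetical)
this_week = [0.10, 0.20, 0.30, 0.40]  # live traffic (hypothetical)
psi, alert = check_drift(baseline, this_week)
print(round(psi, 3), alert)  # 0.228 True
```

In practice a check like this would run on a schedule, per input feature and per demographic group, with alerts routed to the team that owns the model.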

Overall, responsible AI works by integrating ethical considerations into every phase of the AI development lifecycle, from data collection to deployment and monitoring. For more on the AI lifecycle, you may check out our AI Development Services.

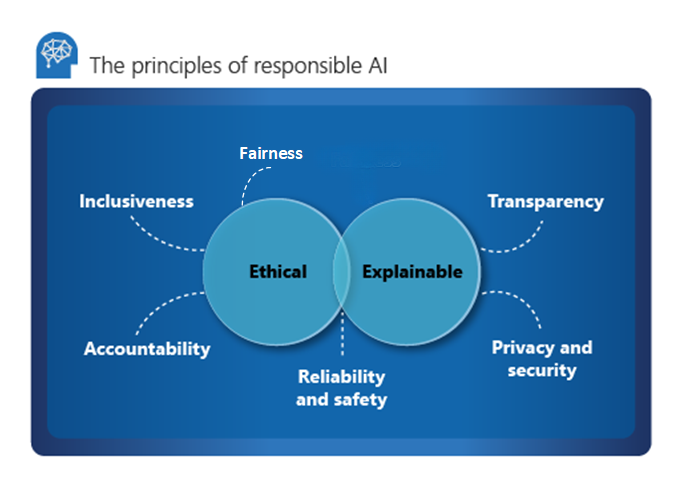

Principles of Responsible AI (RA)

Responsible AI is guided by core principles designed to ensure that systems are developed and deployed ethically and fairly. Understanding these principles, which help address the challenges and risks associated with AI, is a crucial part of understanding what responsible AI is.

Accordingly, here are the fundamental principles of Responsible AI:

- Fairness

AI systems should be designed to ensure equitable treatment for all individuals and groups. This means actively detecting and mitigating biases in both AI models and their training data, to prevent discrimination based on race, gender, age, or other protected characteristics.

Hence, businesses must use diverse datasets and employ techniques to correct any imbalances or biases that may arise.
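One well-known technique for correcting imbalances in training data is reweighing (Kamiran and Calders), which the AIF360 toolkit also offers as a pre-processing algorithm. Each example gets the weight P(group) × P(label) / P(group, label), so that after weighting, group membership and outcome are statistically independent. The pure-Python sketch below illustrates the formula on hypothetical data; it is not AIF360's implementation.

```python
from collections import Counter

def reweighing_weights(groups, labels):
    """Instance weights w(g, y) = P(g) * P(y) / P(g, y).

    After weighting, group membership and label are statistically
    independent in the training data.
    """
    n = len(labels)
    p_group = Counter(groups)
    p_label = Counter(labels)
    p_joint = Counter(zip(groups, labels))
    return [
        (p_group[g] / n) * (p_label[y] / n) / (p_joint[(g, y)] / n)
        for g, y in zip(groups, labels)
    ]

def weighted_positive_rate(weights, groups, labels, group):
    """Weighted share of positive labels within one group."""
    num = sum(w for w, g, y in zip(weights, groups, labels) if g == group and y == 1)
    den = sum(w for w, g in zip(weights, groups) if g == group)
    return num / den

# Hypothetical training data: group "a" has 3/4 positives, group "b" only 1/4.
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
labels = [1, 1, 1, 0, 1, 0, 0, 0]
weights = reweighing_weights(groups, labels)
print([round(w, 3) for w in weights])  # [0.667, 0.667, 0.667, 2.0, 2.0, 0.667, 0.667, 0.667]
print(round(weighted_positive_rate(weights, groups, labels, "a"), 3))  # 0.5
print(round(weighted_positive_rate(weights, groups, labels, "b"), 3))  # 0.5
```

The weights are then passed to a learner that accepts per-sample weights during training.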

- Transparency

AI systems should offer clear and understandable explanations for their decisions and recommendations. Users and stakeholders need to be able to comprehend how and why an AI system arrives at a specific conclusion, which fosters trust and clarity.

- Privacy

Protecting user privacy is a fundamental principle of Responsible AI. AI systems must handle personal data securely, ensuring that its collection, storage, and processing comply with privacy laws and regulations. This commitment to data protection is essential for maintaining user trust and safeguarding sensitive information.

Also, users should retain control over their personal data, including the ability to provide informed consent for its collection. Additionally, responsible AI practices involve respecting user preferences and privacy choices, ensuring that data usage aligns with their expectations.
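Respecting user privacy choices can be enforced in code by gating every data access on the purposes a user has consented to, and by exposing only the fields a task needs (data minimization). The sketch below is a hypothetical in-memory illustration; the `UserRecord` class, the purpose names, and the example users are assumptions, and a real system would back this with a consent-management store and audit logging.

```python
from dataclasses import dataclass, field

@dataclass
class UserRecord:
    user_id: str
    email: str
    consented_purposes: set = field(default_factory=set)

def records_for_purpose(records, purpose):
    """Return only records whose owners consented to this purpose."""
    return [r for r in records if purpose in r.consented_purposes]

def minimize(record, allowed_fields):
    """Data minimization: expose only the fields a task actually needs."""
    return {name: getattr(record, name) for name in allowed_fields}

users = [
    UserRecord("u1", "a@example.com", {"model_training"}),
    UserRecord("u2", "b@example.com", {"marketing"}),
    UserRecord("u3", "c@example.com"),  # no consent given
]
training = [minimize(r, ["user_id"]) for r in records_for_purpose(users, "model_training")]
print(training)  # [{'user_id': 'u1'}]
```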

- Human Oversight

Human oversight in AI systems involves reviewing outcomes to ensure they are fair and aligned with ethical principles. This includes checking AI decisions for accuracy and appropriateness before they are finalized, which helps prevent errors and ensure reliability.

Human oversight also allows ethical judgment to be applied where AI faces complex or nuanced scenarios. In these situations, human intervention and moral reasoning are crucial to ensure that decisions align with ethical standards.
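A common way to put human oversight into practice is a human-in-the-loop routing rule: decisions that are high-stakes, or where the model is not confident, go to a reviewer instead of being finalized automatically. The sketch below assumes the model reports a confidence score; the `Decision` class, the 0.9 threshold, and the cases are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Decision:
    case_id: str
    prediction: str
    confidence: float
    high_stakes: bool

def route(decision, min_confidence=0.9):
    """Send low-confidence or high-stakes decisions to a human reviewer."""
    if decision.high_stakes or decision.confidence < min_confidence:
        return "human_review"
    return "automated"

cases = [
    Decision("c1", "approve", 0.97, high_stakes=False),
    Decision("c2", "deny", 0.95, high_stakes=True),
    Decision("c3", "approve", 0.62, high_stakes=False),
]
for c in cases:
    print(c.case_id, route(c))
# c1 automated
# c2 human_review
# c3 human_review
```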

- Inclusivity

Engaging diverse stakeholders from various backgrounds is essential for designing AI systems that address a broad range of needs. AI systems should also be accessible to individuals with varying abilities and needs, which means incorporating accessibility features and ensuring that AI is usable by everyone, promoting inclusivity and equitable access.

- Sustainability

Sustainability in AI means considering the long-term effects of AI systems on society and the environment. Responsible AI practices involve assessing and addressing the broader implications of AI technologies for future generations, so that AI development supports lasting positive outcomes.
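On the environmental side, a rough first estimate of a training run's operational carbon footprint multiplies energy use by the carbon intensity of the electricity grid. The sketch below uses that back-of-the-envelope formula; every input (GPU count, average power draw, hours, the data center's power usage effectiveness or PUE, and grid intensity) is a hypothetical placeholder, and the estimate excludes emissions from manufacturing the hardware.

```python
def training_emissions_kg(num_gpus, avg_power_watts, hours, pue, grid_kg_per_kwh):
    """Rough operational CO2e estimate for a training run.

    energy (kWh)        = GPUs * power (W) * hours * PUE / 1000
    emissions (kg CO2e) = energy * grid carbon intensity (kg CO2e per kWh)
    """
    energy_kwh = num_gpus * avg_power_watts * hours * pue / 1000
    return energy_kwh, energy_kwh * grid_kg_per_kwh

# All inputs are hypothetical placeholders, not measurements.
energy, co2 = training_emissions_kg(
    num_gpus=8, avg_power_watts=300, hours=100, pue=1.5, grid_kg_per_kwh=0.4
)
print(round(energy, 1), round(co2, 1))  # 360.0 144.0
```

Tracking numbers like these across projects makes it easier to compare options, such as scheduling training in regions with lower-carbon electricity.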

All in all, these principles show what responsible AI is in practice: a framework businesses can use to develop systems that are ethical and beneficial to users. By adhering to these principles, businesses can ensure that their AI technologies contribute positively to the world.

How Do You Design Responsible AI?

Designing Responsible AI involves integrating ethical principles and best practices into every stage of the AI development lifecycle. Businesses need to ensure that their AI systems are fair, transparent, accountable, and aligned with societal values.

So, here’s a comprehensive guide on how to design Responsible AI for businesses:

- Define Ethical Objectives and Scope

Start by defining the ethical goals and scope of your AI project. Assess its societal impact, potential risks, and the values it should uphold. In addition, consider consulting stakeholders to understand their concerns and expectations.

- Assemble a Diverse Team

Assemble a multidisciplinary team with varied backgrounds, such as ethicists, domain experts, data scientists, engineers, and representatives from affected communities. Consequently, this diversity aids in spotting and addressing potential biases and ensures a thorough approach to ethical design.

- Conduct Ethical Impact Assessments

Then, conduct ethical impact assessments to identify potential risks and unintended consequences of the AI system. Also, assess how it might affect various stakeholders and explore scenarios where it could cause harm or perpetuate biases.

Then, use the assessment findings to create strategies for addressing potential issues, such as designing algorithms to reduce bias, implementing strong data governance practices, and ensuring transparency in decision-making.
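A lightweight way to turn impact-assessment findings into priorities is a risk register scored as likelihood × severity, with a threshold above which a documented mitigation plan is required. The sketch below is a hypothetical illustration; the 1-to-5 scales, the threshold of 12, and the example risks are assumptions to adapt to your own assessment method.

```python
from dataclasses import dataclass

@dataclass
class Risk:
    description: str
    likelihood: int  # 1 (rare) to 5 (almost certain)
    severity: int    # 1 (negligible) to 5 (critical)

    @property
    def score(self):
        return self.likelihood * self.severity

def triage(risks, mitigation_threshold=12):
    """Order risks by score and mark which ones need a mitigation plan."""
    ranked = sorted(risks, key=lambda r: r.score, reverse=True)
    return [(r.description, r.score, r.score >= mitigation_threshold) for r in ranked]

register = [
    Risk("Model under-performs for older applicants", likelihood=3, severity=4),
    Risk("Training data includes records without consent", likelihood=2, severity=5),
    Risk("Explanations confuse end users", likelihood=4, severity=2),
]
for row in triage(register):
    print(row)
```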

- Ensure Data Integrity

Gather data ethically by ensuring it is representative, relevant, and obtained with proper consent. Avoid data that could reinforce biases or infringe on privacy rights. Then, establish processes to maintain data quality and integrity throughout its life cycle.
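Maintaining data integrity usually starts with automated checks that run every time data is loaded. The sketch below reports missing values, duplicate keys, and records without an explicit consent flag. The field names (`id`, `age`, `consent`) and rows are hypothetical; production pipelines often use a dedicated data-validation tool instead.

```python
def data_quality_report(rows, required_fields, key_field, consent_field="consent"):
    """Basic integrity checks: missing values, duplicate keys, missing consent."""
    missing = {f: sum(1 for r in rows if r.get(f) in (None, "")) for f in required_fields}
    seen, duplicates = set(), set()
    for r in rows:
        k = r.get(key_field)
        if k in seen:
            duplicates.add(k)
        seen.add(k)
    # Only an explicit True counts as consent.
    without_consent = sum(1 for r in rows if r.get(consent_field) is not True)
    return {
        "rows": len(rows),
        "missing": missing,
        "duplicate_keys": sorted(duplicates),
        "without_consent": without_consent,
    }

rows = [
    {"id": 1, "age": 34, "consent": True},
    {"id": 2, "age": None, "consent": True},
    {"id": 2, "age": 51, "consent": ""},
]
print(data_quality_report(rows, ["age", "consent"], "id"))
# {'rows': 3, 'missing': {'age': 1, 'consent': 1}, 'duplicate_keys': [2], 'without_consent': 1}
```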

However, in today’s data-rich landscape, businesses face challenges such as data silos, quality issues, and other complexities, especially when trying to gain operational efficiency through data integration. To avoid such issues, you may check out our guide on Data integration and how it accelerates business growth.

- Design Fair and Transparent Algorithms

Develop algorithms with a focus on fairness and transparency. Use techniques such as fairness-aware machine learning and explainable AI to ensure that the system’s decisions are equitable and understandable.
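Fairness-aware machine learning techniques are usually grouped into pre-processing, in-processing, and post-processing. As one illustration of the post-processing family, the sketch below picks a separate score threshold per group so that each group is selected at roughly the same target rate. The scores, group labels, and 40% target are hypothetical, and whether group-specific thresholds are appropriate depends on the application and the applicable law.

```python
def threshold_for_rate(scores, target_rate):
    """Score threshold that selects about target_rate of the scores.

    Ties at the threshold can cause slightly more to be selected.
    """
    ranked = sorted(scores, reverse=True)
    k = max(1, round(target_rate * len(ranked)))
    return ranked[k - 1]

def group_thresholds(scores_by_group, target_rate):
    """One threshold per group, each aiming at the same selection rate."""
    return {g: threshold_for_rate(s, target_rate) for g, s in scores_by_group.items()}

scores_by_group = {
    "a": [0.9, 0.8, 0.7, 0.6, 0.3],
    "b": [0.6, 0.5, 0.4, 0.2, 0.1],
}
thresholds = group_thresholds(scores_by_group, target_rate=0.4)
print(thresholds)  # {'a': 0.8, 'b': 0.5}
for g, s in scores_by_group.items():
    selected = sum(score >= thresholds[g] for score in s)
    print(g, selected / len(s))  # a 0.4, then b 0.4
```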

Then, incorporate features that allow the AI system to offer clear and understandable explanations for its decisions. This helps users and stakeholders grasp how the system operates and fosters trust in its outputs.

- Implement Human Oversight

Incorporate human oversight measures into the AI system, particularly in high-stakes applications. It involves having human experts review and validate AI decisions to ensure they meet ethical standards and prevent errors.

Then, set up processes to review AI-generated decisions and outcomes, with mechanisms for human intervention when needed and feedback loops that carry reviewers’ findings back into the system.
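A simple feedback-loop signal is the override rate: how often human reviewers change the model's output. A rising override rate for a model version suggests it needs investigation or retraining. The sketch below assumes each review is logged as (model version, model output, human output); the log format, versions, and 20% alert threshold are hypothetical.

```python
from collections import defaultdict

def override_rates(reviews):
    """Share of reviewed decisions where the human changed the model's output.

    reviews: iterable of (model_version, model_output, human_output)
    """
    totals, overrides = defaultdict(int), defaultdict(int)
    for version, model_out, human_out in reviews:
        totals[version] += 1
        if model_out != human_out:
            overrides[version] += 1
    return {v: overrides[v] / totals[v] for v in totals}

reviews = [
    ("v1", "approve", "approve"),
    ("v1", "deny", "approve"),
    ("v1", "approve", "approve"),
    ("v1", "deny", "deny"),
    ("v2", "approve", "approve"),
    ("v2", "approve", "approve"),
]
rates = override_rates(reviews)
print(rates)  # {'v1': 0.25, 'v2': 0.0}
needs_attention = [v for v, r in rates.items() if r > 0.2]
print(needs_attention)  # ['v1']
```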

- Test and Validate Thoroughly

Now it’s time to test the AI system extensively to assess its performance, fairness, and robustness. Evaluate the system using diverse datasets and various scenarios to ensure it functions correctly and avoids harmful outcomes.

Furthermore, conduct audits to examine the AI system’s impact on different demographic groups.
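An audit of impact on different demographic groups often starts with disaggregated evaluation: computing error rates separately for each group rather than one overall accuracy figure. The sketch below reports true-positive and false-positive rates per group; large gaps between groups indicate a violation of the "equalized odds" fairness criterion. The labels, predictions, and groups are hypothetical.

```python
def group_error_rates(y_true, y_pred, groups):
    """True-positive and false-positive rates per demographic group."""
    stats = {}
    for g in sorted(set(groups)):
        pairs = [(t, p) for t, p, gg in zip(y_true, y_pred, groups) if gg == g]
        pos = [p for t, p in pairs if t == 1]  # predictions on actual positives
        neg = [p for t, p in pairs if t == 0]  # predictions on actual negatives
        stats[g] = {
            "tpr": sum(pos) / len(pos) if pos else float("nan"),
            "fpr": sum(neg) / len(neg) if neg else float("nan"),
        }
    return stats

y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 0, 0, 1, 0, 1, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
rates = group_error_rates(y_true, y_pred, groups)
print(rates)  # {'a': {'tpr': 1.0, 'fpr': 0.0}, 'b': {'tpr': 0.5, 'fpr': 0.5}}
tpr_gap = abs(rates["a"]["tpr"] - rates["b"]["tpr"])
fpr_gap = abs(rates["a"]["fpr"] - rates["b"]["fpr"])
print(tpr_gap, fpr_gap)  # 0.5 0.5
```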

Overall, designing Responsible AI involves integrating ethical considerations, diverse perspectives, and best practices into every stage of the AI lifecycle. A proper understanding of what responsible AI is forms the foundation of this process. For tailored support in implementing these practices, consider partnering with TheCodeWork to help guide your AI initiatives.

Implementation Strategies of RA

Now that we have a comprehensive view of what responsible AI is, let’s look at what it takes to implement Responsible AI in a way that aligns with a business’s goals and values. Here are some strategies for implementing it effectively:

- Leadership Commitment: Business leaders must be committed to ethical AI practices and set the tone for the rest of the company. This includes allocating resources and establishing a culture of responsibility.

- Ethical AI Teams: Create dedicated teams or committees focused on ethical AI practices. Moreover, these teams should include experts from various disciplines, such as ethics, law, technology, and social sciences. As a result, they will provide a holistic perspective on AI development.

- Training and Awareness: Offer training and awareness programs that educate employees on the significance of Responsible AI and how to apply ethical principles. This helps keep teams aligned with the organization’s responsible AI goals.

- Ethical AI Policies: Develop and enforce ethical AI policies that outline the organization’s commitment to Responsible AI. Besides, these policies should cover areas such as data privacy, bias mitigation, and accountability.

- Third-Party Audits: Commission third-party audits of AI systems to ensure compliance with ethical standards and identify potential issues. External audits provide an unbiased assessment of the organization’s AI practices.

- Transparency Reports: Publish transparency reports that provide insights into the organization’s AI practices, including how ethical considerations are being addressed. Moreover, transparency reports help build trust with stakeholders and demonstrate the company’s commitment to Responsible AI.

By adopting these strategies, establishing clear ethical guidelines and integrating responsible practices into development, businesses can ensure they are on the right path to leveraging responsible AI effectively.

Case Studies

Examining real-world examples of Responsible AI provides valuable insight into what responsible AI is and how ethical principles are applied. Here are some case studies that highlight crucial aspects of Responsible AI:

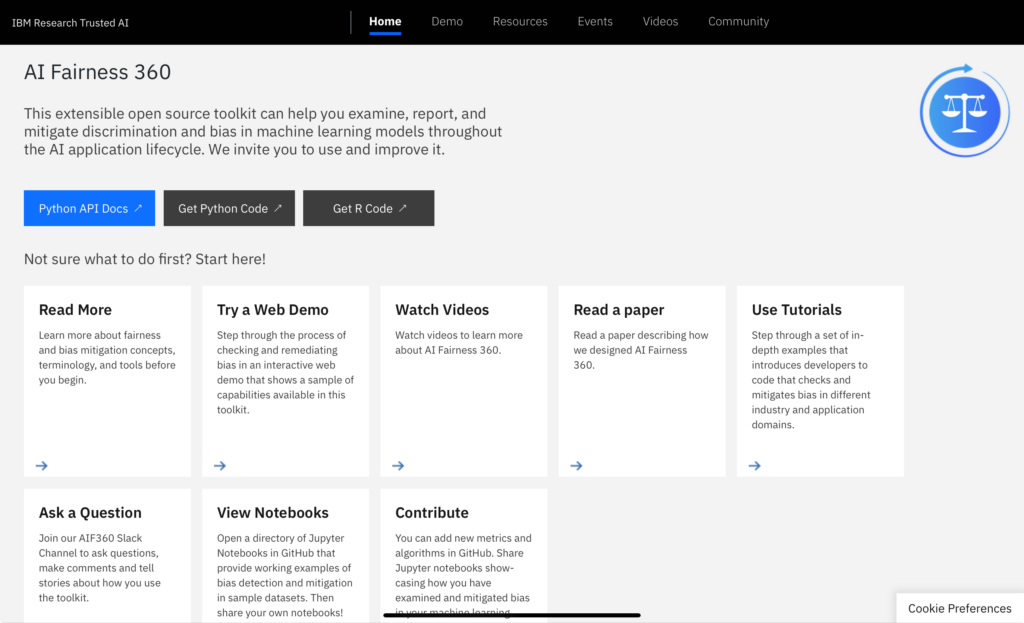

- IBM’s AI Fairness 360 Toolkit

IBM developed the AI Fairness 360 (AIF360) toolkit to help organizations identify and mitigate biases in AI models. Moreover, this open-source toolkit includes a comprehensive set of algorithms, metrics, and visualizations for assessing fairness in machine learning models.

Key Features:

- Bias Detection: AIF360 provides tools for detecting biases in datasets and models, using various fairness metrics.

- Bias Mitigation: The toolkit includes techniques for mitigating bias during the preprocessing, in-processing, and post-processing stages of model development.

- Transparency: Detailed documentation and tutorials help users understand and apply fairness techniques effectively.

The AIF360 toolkit has been widely adopted by organizations and researchers to enhance the fairness of AI systems. Hence, by offering practical tools and resources, IBM has helped the AI community address bias and advance responsible AI practices.

- Microsoft’s AI Ethics Advisory Board

Microsoft established an AI Ethics Advisory Board to provide guidance on ethical issues related to AI development and deployment. Likewise, the board consists of external experts in AI ethics, law, and social justice.

Key Features:

- Expert Guidance: The board offers independent advice on ethical challenges, ensuring that AI systems align with societal values and regulatory requirements.

- Transparency: Microsoft publishes reports and updates on the board’s activities and recommendations, promoting transparency in AI governance.

In brief, the AI Ethics Advisory Board has helped Microsoft navigate complex ethical issues and implement responsible AI practices. Plus, the board’s recommendations have informed the company’s AI policies and contributed to its commitment to ethical AI development.

- The Gender Shades Project by MIT

The Gender Shades Project, led by MIT researcher Joy Buolamwini, examined gender and racial biases in commercial facial recognition systems. The project revealed that these systems exhibited significant disparities in accuracy based on gender and skin color.

Key Features:

- Bias Detection: The project assessed the performance of facial recognition systems across different demographic groups, highlighting disparities in accuracy.

- Advocacy for Change: The findings raised awareness of bias in AI and spurred efforts to improve the fairness of facial recognition technologies.

Consequently, the Gender Shades Project contributed to the development of more inclusive and accurate facial recognition systems. Also, it influenced major tech companies to address bias and improve the performance of their AI systems for diverse populations.

- The Ethical AI Initiative at The Alan Turing Institute

The Alan Turing Institute in the UK launched an Ethical AI Initiative to explore and address ethical issues in AI research and applications. Likewise, the initiative focuses on developing frameworks, guidelines, and tools for responsible AI.

Key Features:

- Research and Frameworks: The initiative conducts research on ethical AI and develops frameworks for integrating ethical considerations into AI projects.

- Collaborative Approach: Also, the Institute collaborates with academic, industry, and policy stakeholders to advance the field of ethical AI.

Accordingly, the Ethical AI Initiative has contributed to the development of best practices and guidelines for responsible AI. Meanwhile, it has also facilitated collaboration between researchers, policymakers, and industry professionals to promote ethical AI practices.

In summary, these case studies highlight diverse approaches to implementing Responsible AI in practice. By examining these strategies and real-world applications, businesses can gain insight into what responsible AI is and how it can benefit them.

How Can TheCodeWork Help You?

At TheCodeWork, we are committed to helping businesses implement Responsible AI practices. Our expertise and solutions are designed to help you navigate the complexities of ethical AI development and deployment.

We can help you establish a robust ethical framework tailored to your organization’s needs. This includes:

- Defining ethical guidelines

- Implementing fairness-aware machine learning practices

- Executing bias mitigation strategies

- Putting data protection measures in place

Our AI experts conduct comprehensive risk assessments to identify potential ethical issues and biases in your AI systems. We also provide recommendations and strategies to mitigate these risks and enhance the fairness and transparency of your AI solutions. Collaborating with us will strengthen your business’s impact and influence in the field of Responsible AI.

To learn more, contact us today!

Bottom Line

Summing up, responsible AI is more than a regulatory mandate; it is a moral and ethical duty for businesses that develop and deploy AI systems. Understanding what responsible AI is highlights the need for practices that build trust among users. The journey toward Responsible AI requires a steadfast commitment to ethical principles, continuous learning, and the flexibility to navigate evolving challenges.

Moreover, this ongoing dedication ensures that AI systems remain aligned with societal values and continue to serve the greater good.

FAQs

Q1. Why is Responsible AI important for businesses?

Answer: Responsible AI is crucial for businesses because it helps in building trust with customers, partners, and regulators. Also, by ensuring ethical AI practices, businesses can avoid legal risks, prevent harm to their reputation, and foster long-term sustainability. It also enhances the quality and fairness of AI-driven decisions, leading to better customer satisfaction and loyalty.

Q2. Can Responsible AI still be profitable?

Answer: Yes, Responsible AI can be highly profitable. By adopting Responsible AI practices, businesses gain customer trust, improve decision-making accuracy, and reduce legal risks, all of which contribute to long-term profitability. Ethical AI also opens up new market opportunities as consumers increasingly prefer companies that demonstrate social responsibility.

Q3. What role does regulation play in Responsible AI?

Answer: Governments and regulatory bodies are increasingly setting standards and guidelines for ethical AI use, particularly around data privacy and transparency. Complying with these regulations not only helps avoid legal penalties but also demonstrates a commitment to ethical practices, which enhances a company’s reputation and customer trust.

Q4. What are the potential risks of not adopting Responsible AI?

Answer: Failing to adopt Responsible AI can lead to severe reputational damage, legal consequences, and loss of customer trust. Public backlash may occur if AI systems cause harm or discrimination, and legal risks can arise from data breaches. As a result, customers may abandon businesses that do not practice AI ethics.